Explore the ethical dilemmas that arise when AI influences decision-making, and learn how to navigate the crossroads of technology and morality to make informed choices.

Artificial intelligence (AI) has ushered in a new era of decision-making, changing how decisions are made and implemented. As we move through this paradigm shift, ethical considerations become increasingly important.

This development has broad implications for industries such as finance, healthcare, and logistics. It demands a careful analysis of the moral dilemmas AI raises so that the decisions we make with it remain morally sound.

The responsible use of AI depends on ethical considerations, particularly now that algorithms hold considerable sway. AI ethics rests on a set of principles intended to ensure responsible AI practices.

The complexity introduced by machine learning algorithms, neural networks, and sophisticated AI models pushes ethical decision-making beyond conventional frameworks. Beyond their practical effects, AI-driven decisions shape societal, cultural, and moral aspects of life.

The ethical dimensions of AI decision-making include transparency, accountability, and fairness. Transparency in AI algorithms is critical for understanding how decisions are made, particularly in scenarios where the decision-making process appears opaque.

Establishing accountability for AI-driven decisions requires checks and balances that guard against unforeseen outcomes. Fairness, in turn, means addressing the biases that can be embedded in AI models so that all people receive equitable results.

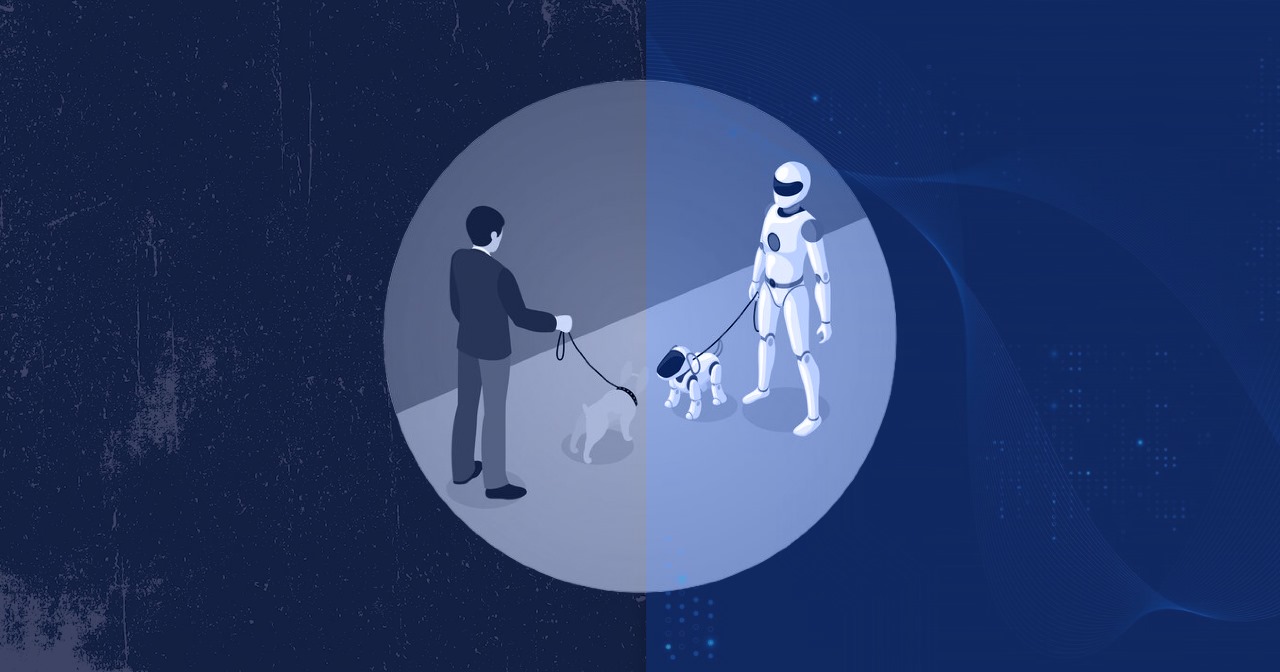

The cooperative relationship between AI and humans becomes a central theme in this transformative era. This partnership seeks to combine the strengths of human intuition, empathy, and ethical reasoning with the precision, efficiency, and data-driven insights of AI algorithms.

The increasingly popular term “augmented intelligence” captures this cooperation: AI suggestions are combined with human judgment so that decisions stay aligned with the ethics of AI.

A fundamental component of human decision-making is empathy, the capacity to recognize and share another person’s emotions. This intrinsic quality sets human reasoning apart in situations where numbers and metrics fail to capture the intricacies of human experience.

In healthcare, human empathy is especially crucial because medical decisions have a profound impact on people’s lives.

Consider a compassionate physician who diagnoses a patient with cancer or another chronic condition. Here, the physician not only interprets clinical data but, guided by empathy, also acknowledges and takes part in the patient’s emotional journey.

Suppose the patient expresses fear of losing their hair during chemotherapy, a concern that goes beyond the medical diagnosis itself. A compassionate physician may respond by adding counseling sessions to the treatment plan alongside more traditional methods.

These sessions function as a therapeutic avenue, assisting the patient in overcoming their fears and improving their quality of life in general while undergoing treatment.

AI, in contrast, is not inherently capable of understanding or connecting with human emotions the way a compassionate human decision-maker is.

In the same healthcare scenario, an AI system may be skilled at evaluating medical data and recommending treatment plans with statistical precision, yet it cannot effectively address the patient’s emotional concerns.

Human intuition, sometimes called a “gut feeling,” is a cognitive process that helps people make decisions when the available information is insufficient. In finance, traders rely heavily on intuition to navigate the complexities of a volatile stock market.

Imagine an expert trader who senses an impending market shift from subtle indicators that traditional data analysis struggles to identify.

The trader can use this intuitive insight as a compass for well-timed, strategic decisions in an ever-changing financial landscape.

AI, on the other hand, lacks this innate intuition. AI algorithms excel at analyzing large volumes of data and detecting trends, but they are less adept at picking up the subtle details and leaps of reasoning that experienced traders make with ease.

This shortcoming is especially noticeable in dynamic marketplaces where quick adjustments and instinctive choices are critical.

AI systems also struggle to match a distinctive strength of human decision-makers: the ability to weigh the complex interplay of contextual and subjective factors.

This ability is particularly evident in the legal field, where professionals go beyond a strict interpretation of the law and take ethical issues and complex contextual analysis into account.

Consider an AI system tasked with automated legal decision-making, such as determining the appropriate sentence for an individual. Although it can process voluminous legal documents and statutes, it has difficulty understanding how a person’s social environment and personal circumstances interact.

This limitation underscores the importance of human involvement in legal decision-making processes. Human experts bring a deeper understanding of the contextual intricacies that AI systems currently lack.

This distinctly human ability ensures a more comprehensive, ethical, and contextually aware approach to decision-making in legal proceedings.

AI has undeniably transformed decision-making, offering unprecedented speed, precision, and analytical capability.

However, this surge in technological prowess is not without its limitations. Understanding the constraints of AI is crucial for discerning scenarios where human judgment proves superior.

AI’s limitations are most apparent in complex or statistically rare situations. Unlike humans, who draw on deep experiential knowledge and can synthesize information, AI may struggle in uncharted territory. Consider the diagnosis of a rare condition with atypical symptoms.

Drawing on years of experience and an intuitive grasp of the subtleties of medical practice, human doctors can look beyond established patterns to reach an accurate diagnosis.

AI algorithms are good at identifying patterns, but rare cases are by definition scarce in their training data, so they may not handle such cases reliably.

Some scenarios also demand a level of adaptability and intuitive problem-solving that AI cannot yet match. Consider an emergency response in which unexpected variables call for quick decisions.

Human responders excel in rapidly evolving, unpredictable environments, relying on their adaptability and real-time judgment. AI, by contrast, tends to struggle in such situations because it is bound by its training and predefined rules.

Algorithmic bias is one of the most visible drawbacks of AI in decision-making. AI systems learn from historical data, and if that data contains biases, the AI may unintentionally perpetuate or even exacerbate these biases.

For instance, in hiring, biased historical data may lead AI algorithms to favor certain demographics and disadvantage others. Identifying and mitigating algorithmic bias remains an ongoing challenge in developing and deploying AI systems.
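One simple way to check a hiring model for this kind of bias is to compare how often it selects candidates from each group. The sketch below is illustrative only: it assumes a hypothetical list of `(group, selected)` outcomes from a screening model and computes the ratio of the lowest to the highest selection rate. The 0.8 cut-off mirrors the “four-fifths” rule of thumb from US employment guidelines; the group names and numbers are made up, and a ratio like this is a screening signal, not a complete fairness audit.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Share of candidates selected per group; decisions are (group, selected) pairs."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical screening outcomes from an AI hiring model (illustrative data only).
outcomes = ([("group_a", True)] * 60 + [("group_a", False)] * 40
            + [("group_b", True)] * 30 + [("group_b", False)] * 70)

ratio = impact_ratio(outcomes)
print(selection_rates(outcomes))
print(f"impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths guideline: audit the model and its training data.")
```

With this made-up data, group_b is selected at half the rate of group_a (ratio 0.50), which would flag the model for closer review of both its outputs and the historical data it learned from.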

Another ethical drawback is that many AI systems cannot explain their decisions. Complex neural networks in particular function as “black boxes,” which makes it difficult to understand how they arrive at specific decisions.

This lack of transparency is concerning, especially in critical domains where decision-makers need to understand the reasoning behind AI recommendations. Remember: it is a “black box,” not an “ethical machine.”
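Even a black-box model can be probed from the outside. The sketch below uses permutation feature importance, a common model-agnostic technique chosen here for illustration: shuffle one input column at a time and measure how much accuracy drops. The model, its feature names, and the data are all hypothetical; the labels are generated by the model itself so that baseline accuracy is exactly 1.0.

```python
import random

def black_box(row):
    """Hypothetical opaque model: callable, but treated as if its logic were hidden."""
    income, debt, zip_code = row  # zip_code is deliberately ignored by this model
    return 1 if income - 2 * debt > 10 else 0

def accuracy(model, X, y):
    return sum(model(x) == label for x, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, seed=0):
    """Accuracy drop when each feature column is shuffled, one column at a time."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by its shuffled value.
        X_perm = [row[:j] + (value,) + row[j + 1:] for row, value in zip(X, column)]
        importances.append(baseline - accuracy(model, X_perm, y))
    return importances

# Illustrative synthetic data; labels come from the model, so baseline accuracy is 1.0.
data_rng = random.Random(42)
X = [(data_rng.uniform(0, 100), data_rng.uniform(0, 40), data_rng.randint(10000, 99999))
     for _ in range(200)]
y = [black_box(row) for row in X]

for name, imp in zip(["income", "debt", "zip_code"], permutation_importance(black_box, X, y)):
    print(f"{name:>8}: {imp:.3f}")
```

A feature the model ignores, such as `zip_code` here, scores exactly 0.0. The technique shows which inputs matter to the model overall, not the reasoning behind any individual decision, so it narrows the transparency gap rather than closing it.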

AI systems, especially those built on machine learning, are also vulnerable to adversarial attacks: inputs deliberately crafted to trick a model into making mistakes.

AI-based intrusion detection systems in cybersecurity, for example, can be compromised by such hostile inputs, jeopardizing the security of the systems they protect. Building robust defenses against adversarial attacks remains one of the main goals of AI research.
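To see how small a successful adversarial change can be, consider a deliberately simplified detector that flags traffic as malicious when a linear score is positive. The sketch below applies a step in the style of the fast gradient sign method (FGSM): for a linear score the gradient with respect to the input is just the weight vector, so nudging each feature against the sign of its weight lowers the score. The weights, bias, features, and `eps` value are hypothetical; real intrusion detection models are more complex, but the same idea underlies many attacks on them.

```python
def score(w, b, x):
    """Linear detector: a positive score means the input is flagged as malicious."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_evasion(w, x, eps):
    """FGSM-style step for a linear model.

    The gradient of the score with respect to x is w, so moving every feature
    by eps against sign(w) lowers the score as much as possible while changing
    no single feature by more than eps.
    """
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]

# Hypothetical weights and traffic features, for illustration only.
w = [0.8, -0.5, 1.2]
b = -1.0
x = [1.0, 0.2, 0.6]

x_adv = fgsm_evasion(w, x, eps=0.2)
print("original score:   ", round(score(w, b, x), 2))      # 0.42, flagged
print("adversarial score:", round(score(w, b, x_adv), 2))  # -0.08, not flagged
```

Each feature moves by only 0.2, yet the score drops by 0.2 times the sum of the absolute weights (0.5), which is enough to slip the input past the detector.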

In AI-assisted decision-making, algorithmic accountability is a crucial issue with both moral and legal dimensions.

While established processes exist for dealing with errors or failures caused by human clinicians, the picture becomes far more complex when errors or failures are caused by AI systems.

Current national and international legislation leaves clear gaps regarding who is responsible for AI-related accidents. Assigning roles and duties is made harder by the complexity of the AI lifecycle, which spans everything from design to deployment.

An important question is how AI errors should be handled differently from human clinician errors in healthcare, where AI’s impact is especially noticeable.

A serious problem would arise if clinicians were reluctant to accept the additional accountability that comes with using AI. More broadly, the diffusion of responsibility across the AI ecosystem has significant ramifications.

If responsibility for harm and wrongdoing is not clearly defined, this spreading of responsibility could fuel public apprehension and doubts about technological progress. The resulting ambiguity could erode confidence, deepen existing concerns about AI, and strengthen arguments for strict regulation.

An interesting case study that highlights the complexities of algorithmic responsibility is the recent incident involving a lawyer in New York who was using ChatGPT, an AI tool, for legal research.

The attorney, Steven A. Schwartz, acknowledged that he relied on ChatGPT without first verifying the accuracy of what it produced. Accountability came into question when fabricated case citations later appeared in a court filing.

Schwartz used ChatGPT to find prior court decisions supporting his client’s lawsuit against an airline. The tool suggested cases that later proved to be nonexistent, exposing him to potential charges of professional misconduct.

This case emphasizes the difficulties in holding people responsible for mistakes caused by AI, as well as the necessity of explicit rules and roles in the integration of AI across multiple domains.

One revolutionary idea that has gained traction is augmented intelligence. The notion represents a fundamental shift in how we understand people’s engagement with technology.

Where artificial intelligence is often framed as a replacement for human effort, augmented intelligence focuses on collaboration between humans and technology. It aims to augment human cognitive abilities with cutting-edge technologies rather than pursue total automation.

The main goal of augmented intelligence is to give human decision-makers the resources and knowledge that come from artificial intelligence algorithms. It fosters a symbiotic relationship that optimizes decision-making processes by acknowledging the strengths of both humans and machines.

Augmented intelligence systems are designed to supplement human judgment, not replace it, compensating for its shortcomings and improving overall performance.
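One way to make “supplement, not replace” concrete is a human-in-the-loop workflow in which the AI only shapes how a case is reviewed, while a person always makes the final call. The sketch below is a hypothetical design, not a standard API: the `Recommendation` fields, the 0.9 confidence threshold, and the review-path names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Recommendation:
    case_id: str
    suggestion: str
    confidence: float  # model's own estimate, from 0.0 to 1.0
    high_stakes: bool  # e.g. a diagnosis or a sentencing decision

def review_path(rec: Recommendation, threshold: float = 0.9) -> str:
    """Every path ends with a human decision; the AI only shapes the review."""
    if rec.high_stakes or rec.confidence < threshold:
        return "full_review"  # human examines the case in depth; AI output is advisory
    return "expedited_review"  # human checks and signs off on the AI suggestion

def decide(rec: Recommendation, human_decision: str) -> dict:
    """Record the outcome; the human's decision is always final."""
    return {
        "case_id": rec.case_id,
        "ai_suggestion": rec.suggestion,
        "review_path": review_path(rec),
        "final_decision": human_decision,
        "overridden": human_decision != rec.suggestion,
    }

routine = Recommendation("case-001", "approve", confidence=0.95, high_stakes=False)
critical = Recommendation("case-002", "approve", confidence=0.97, high_stakes=True)
print(decide(routine, "approve"))
print(decide(critical, "refer to specialist"))
```

High-stakes cases always get a full human review regardless of model confidence, and recording overrides gives an audit trail of where human judgment and AI suggestions diverged.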

The limitations of AI in decision-making highlight the importance of integrating technology in a balanced and thoughtful manner. The future holds immense promise for collaborative decision-making, in which the strengths of humans and AI are combined for the best possible outcomes.

It is through this harmonious integration that we pave the way for a future where technology and humanity coexist, shaping decisions that are not only efficient but also ethical.
